What Is A/B Testing and Why Does It Matter?

A/B testing, also known as split testing, is a controlled experiment in which marketers compare two versions of a marketing asset, such as an email, landing page, or advertisement, by showing each version to a randomly chosen share of the audience. The objective is to identify the version that performs better against a specific goal, whether that is a higher click-through rate, more conversions, or stronger engagement.

Refining Your Strategy

A/B testing helps you refine marketing strategies by basing decisions on actual user data rather than assumptions. This keeps your campaigns aligned with your audience's preferences. Through ongoing testing and optimization, you can steadily improve the efficiency of your campaigns.

Choosing Elements for Testing

Different elements of a campaign affect its performance to different degrees. The goal is to pinpoint the elements most likely to influence your campaign's effectiveness and test those first. Common candidates include:

- Headlines: Experiment with different headline variations to captivate your audience from the outset.

- CTAs (Calls to Action): Optimize conversion rates by testing different button text, colors, and placements.

- Visuals: Determine which images and videos resonate most effectively with your audience.

- Copy: Enhance engagement and conversions by testing different messaging styles.

- Layout and Design: Elevate user experience through experimentation with various campaign layouts.

- Audience Segmentation: Leverage A/B testing for audience targeting to fine-tune your campaign for distinct customer segments.

Setting Clear Objectives

Before you start A/B testing, define your goals and key performance indicators (KPIs). State what you want the test to accomplish and which metrics matter most for your campaign's success.

Make sure these objectives align with your overall marketing strategy, so that each test contributes directly to your broader goals.

Creating Test Variations

In crafting variations for A/B testing, it is vital to maintain consistency while introducing changes. Here are some best practices to uphold:

Modify one element at a time:

This approach simplifies the process of pinpointing the change that leads to enhanced performance.

Maintain distinct differences between variations:

Changes that are too subtle may not produce a measurable difference, leaving you without a clear insight.

Document the changes implemented:

Keeping track of the alterations in each variation facilitates easy reference.
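One lightweight way to follow these practices is to keep a structured record of each test. The Python sketch below is illustrative only: the `Variation` and `ExperimentPlan` classes and all of their field names are hypothetical and do not belong to any testing platform. It records what each variation changes and flags plans that modify more than one element.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Variation:
    """One version of the asset. All field names here are illustrative."""
    name: str
    changed_element: str  # the single element modified; "" for the control
    description: str


@dataclass
class ExperimentPlan:
    """A written record of what a test changes and why."""
    test_name: str
    hypothesis: str
    primary_kpi: str
    start_date: date
    variations: list[Variation] = field(default_factory=list)

    def changed_elements(self) -> set[str]:
        # Collect every element that differs from the control.
        return {v.changed_element for v in self.variations if v.changed_element}

    def changes_single_element(self) -> bool:
        # True when the plan follows the "one element at a time" practice.
        return len(self.changed_elements()) <= 1


# Hypothetical example: a test that changes only the CTA button text.
plan = ExperimentPlan(
    test_name="landing-page-cta",
    hypothesis="A more specific button label increases sign-ups",
    primary_kpi="conversion rate",
    start_date=date(2024, 1, 15),
    variations=[
        Variation("A", "", "Existing page (control)"),
        Variation("B", "cta_text", "Button reads 'Start my free trial'"),
    ],
)
print(plan.changes_single_element())
```

Keeping a record like this for every test also gives you a history to consult when planning future experiments.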

Executing the Test

With your testing parameters and objectives in place, it's time to put your A/B test into action. Follow these steps:

- Choose a Testing Platform: Select a reputable A/B testing platform suited to your needs. Well-known options include Optimizely and Unbounce. Google Optimize, formerly a popular choice, was discontinued by Google in September 2023.

- Establish Variations: In your chosen platform, configure the variations you want to test and make sure they are shown to your audience at random.

- Form Control and Treatment Groups: Divide your audience into two groups, the control group (A) and the treatment group (B). The control group sees the existing version, while the treatment group sees the new variation.

- Run the Test: Let the test run for a predefined duration, long enough to collect the sample size needed for statistically reliable results.

- Monitor and Collect Data: Monitor the test's progress and collect data on key metrics such as click-through rates, conversions, and engagement.

- Analyze Results: When the test ends, compare the variations to determine which performed better, and use a statistical significance test to confirm that the difference is unlikely to be due to chance.
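Testing platforms handle the random split for you, but the idea behind forming control and treatment groups can be sketched in a few lines of Python. This is a minimal illustration and not how any particular platform works: the function name `assign_group`, the experiment label, and the 50/50 default split are all assumptions.

```python
import hashlib


def assign_group(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Return "A" (control) or "B" (treatment) for a user.

    Hashing the experiment name together with the user ID gives each user a
    stable, effectively random bucket, so a returning visitor always sees
    the same variation.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits to a number in the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < treatment_share else "A"


# Hypothetical usage: split 10,000 made-up user IDs and count each group.
groups = [assign_group(f"user-{i}", "homepage-headline") for i in range(10_000)]
print("control:", groups.count("A"), "treatment:", groups.count("B"))
```

Assigning by hash rather than by a fresh random draw on every visit keeps each person's experience consistent, which matters when the same visitor returns several times during the test.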

Interpreting Results

Interpreting the results is where test data turns into decisions. Here's how you can derive meaning from the data:

Compare Key Metrics:

Analyze the performance of the control and treatment groups concerning your predefined KPIs.

Seek Statistically Significant Differences:

Confirm that the observed improvements are statistically significant and unlikely to be the result of chance.

Draw Actionable Insights:

Use the results to guide your marketing decisions and make data-driven improvements.
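When the KPI is a conversion rate, one common way to check for statistical significance is a two-proportion z-test. The Python sketch below assumes that test suits your data and that each visitor is counted once. The function name and the visitor and conversion counts are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("Both groups need at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control converts 200 of 5,000, treatment 250 of 5,000.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at the 5% level" if p < 0.05 else "not significant at the 5% level")
```

A small p-value suggests the difference between the groups is unlikely to be chance alone. The 5% threshold used here is a common convention, not a rule, so choose your threshold before the test starts.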

Iterative Testing and Continuous Improvement

A single A/B test is not enough to keep your competitive edge in a changing marketing landscape. Make experimentation and optimization an ongoing habit. Here are strategies to leverage A/B testing for sustained success:

- Schedule regular A/B tests to continually enhance your marketing materials.

- Ensure that your A/B testing hypotheses and objectives remain aligned with your evolving marketing strategy.

- Document your findings and learn from prior tests to steer future campaigns.

Case Studies and Success Stories

Here are two real-world examples of businesses that achieved notable improvements through A/B testing:

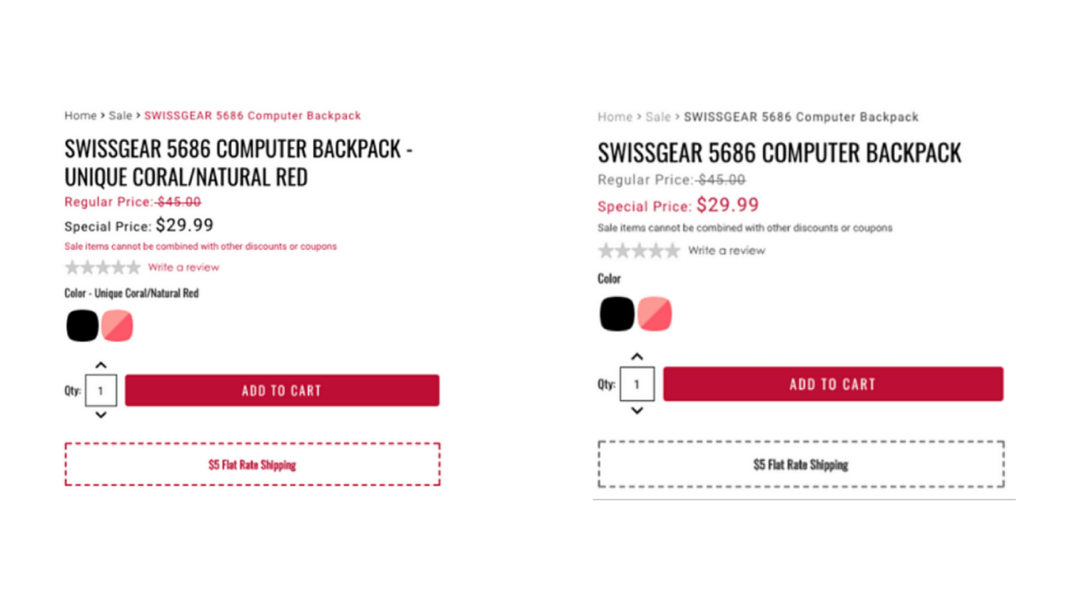

Case Study 1: Swiss Gear’s Product Page Test

To improve its product page and drive conversions, Swiss Gear ran an experiment. The original product page featured a combination of red and black elements that lacked clear focal points, while the variant highlighted key elements in red, such as the 'special price' and 'add to cart' sections, making critical information readily visible. These focused modifications resulted in a 52% increase in conversions, with an even larger 132% boost during the holiday season.

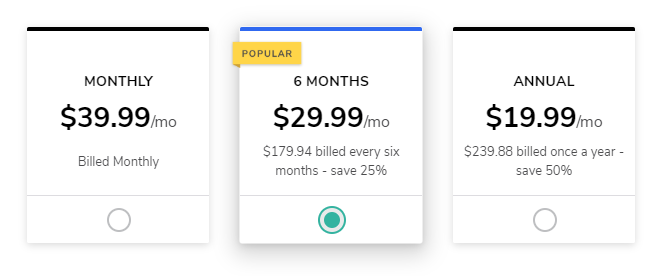

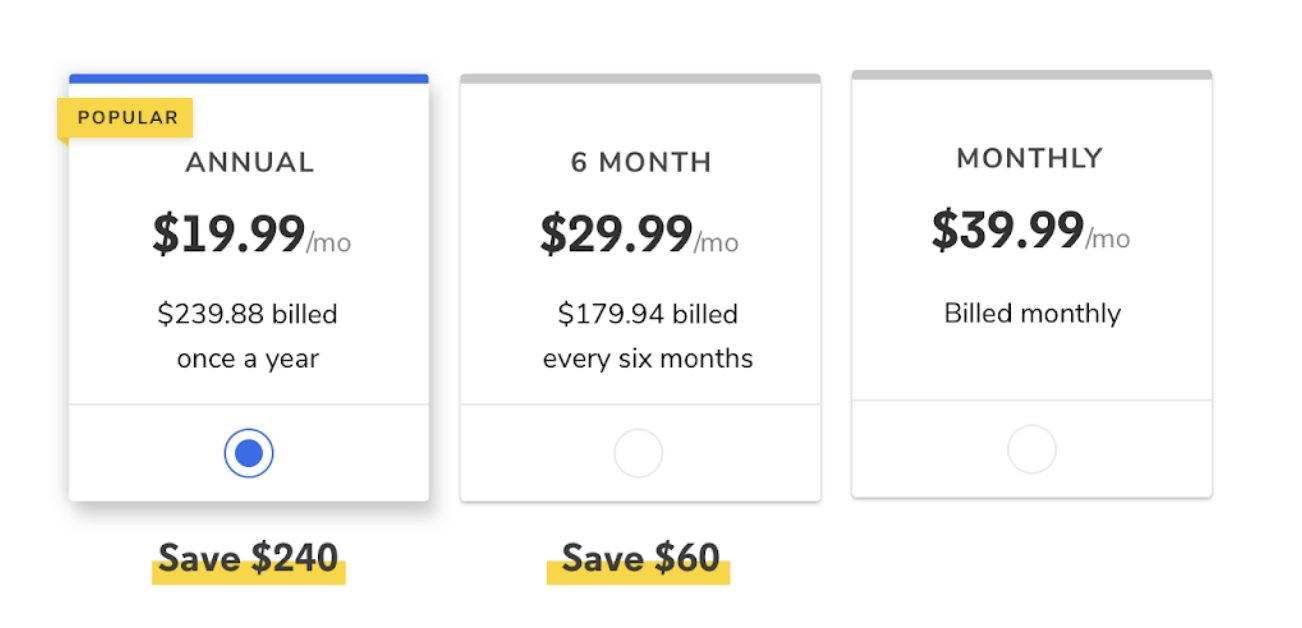

Case Study 2: Codecademy’s Pricing Test

Codecademy conducted an A/B test on its pricing display, comparing the original pricing page with a variation. The variation applied the 'Rule of 100', a pricing heuristic which holds that discounts on prices above $100 look larger when shown as an absolute amount than as a percentage, and displayed the dollar amount saved on the annual plan instead of a percentage. This test yielded a notable 28% increase in annual Pro plan sign-ups and a slight boost in overall page conversions.

Common Pitfalls and How to Avoid Them

Although A/B testing is a powerful tool, its results are easy to misread: by some estimates, approximately 30% of A/B tests are not analyzed correctly. Here are some common pitfalls to watch for:

- Testing too many variables at once can lead to inconclusive results. Stick to one change at a time.

- Ignoring statistical significance can lead to erroneous conclusions. Ensure your results are reliable.

- Running a test on too small a sample can skew your findings. Gather enough data to draw meaningful insights.
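To get a feel for how much data is "enough", you can estimate the required sample size before the test starts. The Python sketch below uses a standard normal-approximation formula for comparing two conversion rates. The function name, the 5% significance level, the 80% power, and the example rates are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, expected_rate: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Estimate the visitors needed in EACH group to detect the given change."""
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical scenario: a 4% conversion rate that you hope to raise to 5%.
needed = sample_size_per_group(baseline_rate=0.04, expected_rate=0.05)
print(f"About {needed} visitors per group")
```

With these example rates the estimate is several thousand visitors per group. Smaller expected improvements require considerably larger samples, which is why very subtle changes are hard to evaluate.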

Tools and Resources

To succeed in A/B testing, you'll need the right tools and resources. Here are recommendations for marketers at various skill levels:

For Beginners:

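Google Optimize long served as an entry point for beginners thanks to its user-friendly interface for setting up and running A/B tests, but Google discontinued it in September 2023. Landing-page builders with built-in A/B testing, such as Unbounce, are an accessible alternative.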

For Intermediate users:

Optimizely provides advanced features for more complex testing scenarios.

For Advanced users:

Custom coding and data analysis tools can be employed to create highly customized A/B tests.

In marketing, success hinges on making informed decisions. A/B testing lets you base those decisions on data, improve campaign performance, and drive higher ROI. By choosing elements wisely, setting clear objectives, creating meaningful variations, and continually iterating, you can steadily refine your marketing strategies. Mastering A/B testing takes practice, so start testing, learning, and optimizing today to stay ahead of the competition.
